Presentation on Bedrock TM1

Posted: November 27, 2012 Filed under: Bedrock, best practice, Cognos TM1

Bedrock TM1 has long been a favorite of mine, a real game changer for TI-intensive TM1 models. If you haven’t yet done so, I urge you to check out its site, download the best practice guides and start using this open toolset. If you are already using it, version 2.0 is out with various refinements.

Below is my Prezi presentation, delivered at the Cubewise Conference, providing some context and answers to the why and how of using Bedrock TM1.

Alternatively, view Bedrock TM1 on prezi.com.

TM1 User Conference is coming to Europe

Posted: September 16, 2012 Filed under: Cognos TM1 | Tags: conference

Cubewise has a pretty good track record in organising TM1 conferences covering both the cutting edge and best practices in making the most of this tool.

For the very first time the TM1 User Conference is coming to Europe, with the following agenda. Yours truly will also host a session on Bedrock in the afternoon.

Key Highlights:

– Video presentation by Manny Perez – the creator of TM1

– In-depth looks at TM1 10.1 and Cognos Express 10

– “Big TM1” : How does TM1 handle large Data Volumes (Presentation by Peter Dailey, from Target Australia)

– “Big TM1” : How to manage large and complex TM1 projects (Client Case Study)

– Expert content for Developers / Administrators, End Users, and Strategic Stakeholders

– All of the expert focus without the spin that makes this conference the key event of the year for everyone having anything to do with TM1

– Essential networking with your peers over great food and drinks

Key Information:

– Where: The Hotel Bloom, Rue Royale 250 Koningsstraat, Brussels

– When: September 20, 2012 (all day event)

Register here: http://www.cubewise.be/seminars-352.php

Case study: Cash Flow Forecasting at Euroports

Posted: September 18, 2011 Filed under: case study, Cognos Express, Cognos TM1

After a long period of intense work it’s time for some knowledge sharing. We have come a long way from a pilot with a novel approach to a live operational tool rolled out to more than 40 entities.

A reassuring sign of having created a living system is business ownership: an increasing number of users and a continuous flow of requests from every level (even the CEO), taking advantage of the available structures and data and using them in novel ways.

As the project has been featured at the Cubewise IBM Cognos TM1 User conference in Sydney I am happy to share the slides via Google Docs (original downloadable as pdf).

To read about the general approach in detail please refer to the article ‘Rethinking Cash Flow Forecasting – Building an Operational Tool with TM1‘.

Innovative and Valuable Short Term Cash Flow Modeling at Euroports Belgium

New Major Releases: Cognos Express 9.5 and TM1 9.5.2

Posted: April 6, 2011 Filed under: Cognos, Cognos Express, Cognos TM1, feature, new release

This time I would like to briefly cover two major releases of IBM’s Business Analytics offering. Having sat through various partner presentations and already installed these new releases at clients, I can say that this time it’s definitely bigger than just a version number increase.

On the Cognos Express platform

Let me put IBM into perspective regarding performance management (hold on for a bit as this is quite relevant for our review):

Apart from the obvious (IBM’s position as a leader) the interesting bit is who’s not on the list of the traditional BI vendors: Microsoft, Microstrategy, and upcoming challengers like Tableau and Qlikview. If you take a look at the BI platforms’ quadrant [link] this difference becomes quite apparent. For Performance Management applications you need some sort of planning component, which at its very core is based on (OLAP) writeback, still a rather rare capability. While we’re at it, I would strongly suggest going for an environment (both on a software and logical level) where planning (incl. simulation) and analytical capabilities are not ‘far’ from each other. In real life such activities are not clearly separated either.

So what we have with Cognos Express 9.5 is a mature (2nd major version), purpose-built and integrated platform featuring standardized reporting, ad-hoc analysis and planning coupled with the power and flexibility of an Excel-friendly in-memory analytic server (TM1 – more on that later). If used well this can be truly powerful, especially for the mid-size companies the product is aimed at. With its price tag [link: http://blog.pervasivepm.com/2011/02/04/ibm-extends-cognos-express.aspx?ref=rss ] it is affordable and provides great value for the money.

Nevertheless we have not reached nirvana yet. I do have to agree with Stephen Few’s remarks [link: http://www.perceptualedge.com/blog/?p=915 ] that traditional BI companies are still not great at usability, data sense-making, collaboration and visualization. But after years spent with acquisitions and integration I see there is a new direction (and roadmap) to include innovations of the upcoming generation of Qlikview, Tableau and the like.

If you still feel an unfilled gap in your requirements take a look at the emerging desktop analytics products (like PowerPivot, shipped freely with MS Office 2010) but I would advise not to miss the opportunity a unified platform offers.

Cognos TM1, 9.5.2

I would like to avoid praising TM1 too much, as I am obviously biased. Let me just state that it is the Swiss Army knife of business modeling, planning and (calculation-intensive) analytics. Technically it stands out as a 64-bit capable in-memory MOLAP server with an extremely fast calculation engine. In practice this means query times from under a second to a few seconds on hundreds of megabytes of data and hundreds of chained business rules (defined through Excel-like, user-friendly expressions, not ‘code’) – in real time, as data is entered or modified. If you haven’t done so yet, check it out: this video [ link or embed: http://www.youtube.com/watch?v=Q8hWboy4lQY ] demonstrating its financial analytics capabilities would be a good start – fast forward to 2:50 to see it in action, but check the first half afterwards as well – it just makes more sense in this order.

Back in the days before Cognos acquired TM1 from Applix it was reviewed separately in the (then) OLAP Survey, so to give you a feel for its reception I have included some charts from the 6th edition, 2007 – most of this still holds true today.

The reason the 9.5.2 release really stands out is its scalability and performance improvement. TM1 has long been an enterprise product but, like most IT systems, it did not handle well the high level of concurrent writes typical of a planning application during the budgeting period. In prior versions, to ensure consistency, reads were blocked until writes finished, and new writes could not start before the reads in the ‘queue’ finished, as illustrated by the following diagram:

To work around these limits many models have been engineered to avoid this kind of lock ‘contention’ (an approach referred to as partitioning).

Enter Parallel Interaction. As the name implies it enables parallel data entry and loading:

Metadata modifications (like dimension updates / hierarchy changes) still block, but that’s acceptable. To take advantage of PI it has to be explicitly enabled with the ParallelInteraction=T configuration option, and ample extra memory and CPU capacity should be planned in: due to the individual versions or ‘snapshots’ of data views, memory consumption is expected to rise by 10-30%. PI also makes good use of those extra cores found in today’s processors – a welcome improvement, as TM1 has historically been single-threaded.

The improvements should become noticeable with tens of users, and significant (>80%) above a hundred users. I am definitely planning to test it at customers, so after the next budgeting cycle this article will likely get an update.

Project In Your Pocket: A Review

Posted: April 6, 2011 Filed under: agile, Cognos TM1, project management, project plan, Uncategorized

This is an absolute classic. In one image it tells so much of what can go wrong in a project that I would recommend framing it and hanging it on the wall of every meeting room.

I believe a project plan is first and foremost a communication tool: a tool that is supposed to capture, integrate and disseminate the perspectives of all involved stakeholders along the who, why, what, when and how line. Gareth John Turner provides a pragmatic approach to covering it all. It’s particularly suited to smaller projects, or any project for that matter where the goal is to get the job done instead of pumping out PM documents that conform to some arcane methodology and that nobody will ever read.

Don’t get me wrong, I am totally in favor of good project management; it really is a (the) cornerstone. Too often, however, it misses the point and lives a parallel life. I would suggest that all my colleagues get this booklet, as it is easy to read, enjoyable and doubles as a great primer in PM.

It has already been put to the test and established as the standard methodology for small BI projects at a leading global financial services company. Also worth mentioning: Mr. Turner is a senior TM1 Project Manager and Developer thus the PIP approach should definitely be considered for TM1 projects where managing ‘agility’ (quite often volatility) can be a major challenge.

Just like the book follows through an actual case study, this review ends with the one-pager PIP. Don’t forget it for the next meeting!

Rethinking Cash Flow Forecasting – Building an Operational Tool with TM1

Posted: April 3, 2011 Filed under: case study, Cognos TM1, financial analytics, planning

Cash Flow Forecasting is getting the attention of CFOs and finance professionals. The economic crisis certainly puts the pressure on in terms of available cash and efficiency, not to mention the risks of late-paying or defaulting customers.

Problems associated with common approaches

Accounting systems don’t provide foresight, and their perspective – the reports they output – is quite limited anyway. Thus the office of finance is forced to roll its own solution. More often than not, the tool used is Excel. Such approaches have several shortcomings. They are tiresome to maintain, involving regular and intensive communication to gather all the required data and forecasts; they suffer from all the too well-known issues of spreadsheets; and, due to inherent limitations, they fall short of providing enough detail to be useful as an operational tool.

Business Intelligence (BI) and related technologies are not new to organizations facing these kinds of issues. There is a good chance IT has some queries or even a data mart in place to support the various data needs of finance. But does that live up to the expectations? Usually not or only to the limit of providing basic detailed data that has to be processed by business users according to their own business logic. And business users are just as their name implies business professionals. They rarely have the skills and tools available to efficiently process data – that’s supposed to be IT’s job.

Tool selection

A tool is needed that can handle massive data volumes and provides a straightforward, not-too-technical way to implement business logic. In this case IBM’s Cognos TM1 has been chosen, being a specialist tool for this kind of problem and having quite a long and successful track record.

Once the tool is available it’s a matter of translation. It’s easy to get lost, illustrated by many IT (-logic) driven solutions providing inflexible data-centric results.

So what can CFOs do to alleviate this situation? It is critical to consider and embrace both aspects of the problem – both the business and the IT side and their interaction.

Rethinking cash conversion metrics – introducing Payment Behaviour Analytics

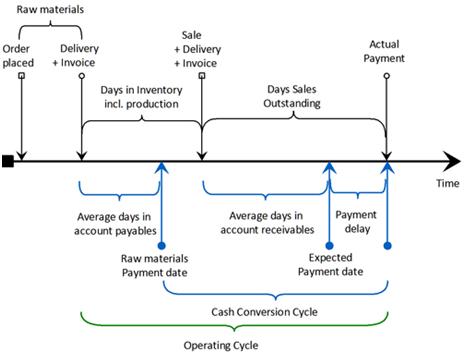

The cash conversion cycle provides the theoretical background and metrics used for cash flow forecasting:

The DSO (Days Sales Outstanding) and DPO (Days Payable Outstanding) are the traditional building blocks to estimate when Accounts Receivable (AR) and Accounts Payable (AP) are realized. This approach has one significant drawback that diminishes the forecast’s accuracy: both metrics are based on total (aggregated) data and do not take the payment terms of individual customers or deals into account.
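To make the drawback concrete, here is a minimal sketch using the textbook definitions (DSO = receivables / credit sales × days in the period; DPO is the analogue with payables and cost of sales). The function names and the two customers with their figures are made up for illustration; they are not taken from the project.

```python
def dso(receivables: float, credit_sales: float, days_in_period: int) -> float:
    """Days Sales Outstanding for one period (textbook definition)."""
    return receivables / credit_sales * days_in_period


def dpo(payables: float, cost_of_sales: float, days_in_period: int) -> float:
    """Days Payable Outstanding for one period (textbook definition)."""
    return payables / cost_of_sales * days_in_period


# Hypothetical 30-day period: (open receivables, credit sales) per customer.
customers = {
    "prompt payer": (30_000.0, 90_000.0),
    "late payer": (60_000.0, 30_000.0),
}

# DSO calculated per customer versus one blended figure for the whole book.
per_customer = {name: dso(ar, sales, 30) for name, (ar, sales) in customers.items()}
total_ar = sum(ar for ar, _ in customers.values())
total_sales = sum(sales for _, sales in customers.values())
aggregate = dso(total_ar, total_sales, 30)

print(per_customer)
print(aggregate)
```

With these invented numbers the blended DSO comes out at 22.5 days, while the two customers sit at roughly 10 and 60 days, so a forecast built on the aggregate describes neither of them.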

However today’s BI tools making invoice / document line level data available through connecting directly to the accounting system it is feasible to build

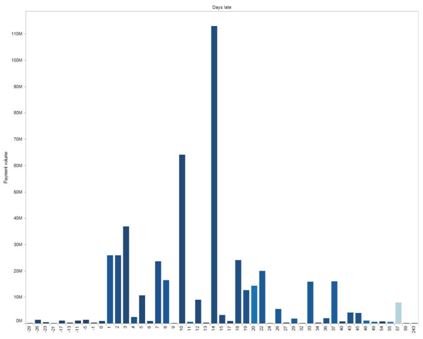

Payment Behaviour Analytics. This provides average payment terms, days late/early, receivables volumes on customer, entity, transaction currency, intercompany, etc. level. It’s certainly possible to analyze customers payment behaviour along the time axis thus also providing early warning for finance and accounting.
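A rough sketch of the idea in plain Python, outside TM1: given invoice-level data, average days late and receivables volume can be derived per customer. The invoice tuples, customer names and the `payment_behaviour` helper are hypothetical and only illustrate the calculation, not the actual model.

```python
from collections import defaultdict
from datetime import date

# Hypothetical paid invoices: (customer, due date, payment date, amount).
paid_invoices = [
    ("ACME", date(2011, 1, 31), date(2011, 2, 10), 1000.0),
    ("ACME", date(2011, 2, 28), date(2011, 3, 6), 3000.0),
    ("Globex", date(2011, 1, 31), date(2011, 1, 29), 2000.0),
]


def payment_behaviour(invoices):
    """Return {customer: (average days late, total volume)}.

    A negative number of days means the customer pays early on average.
    """
    days = defaultdict(list)
    volume = defaultdict(float)
    for customer, due, paid, amount in invoices:
        days[customer].append((paid - due).days)
        volume[customer] += amount
    return {c: (sum(d) / len(d), volume[c]) for c, d in days.items()}


behaviour = payment_behaviour(paid_invoices)
for customer, (avg_late, total) in behaviour.items():
    print(customer, avg_late, total)
```

The same grouping could be repeated by entity, currency or month to get the other analysis levels mentioned above.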

Given the right model and tool like TM1 this can be further expanded to analyze payment terms (whether or not they are related to the end-of-month) given this is not recorded in the operational systems. Some examples of the possibilities:

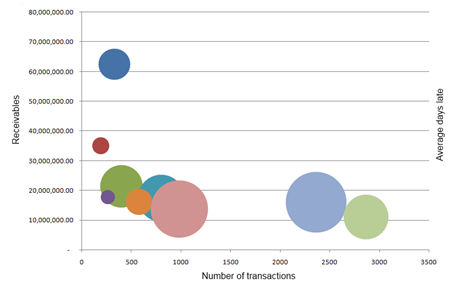

Payment behaviour histogram – average days late by receivables volume

Top customers – bubble size represents average days late

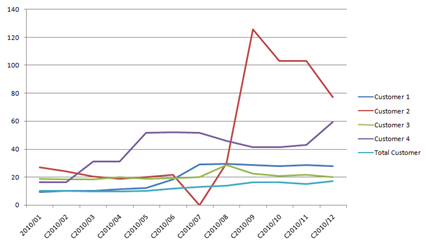

Average days late by selected top and total customers per month

The Cash Flow Forecast – as an operational tool

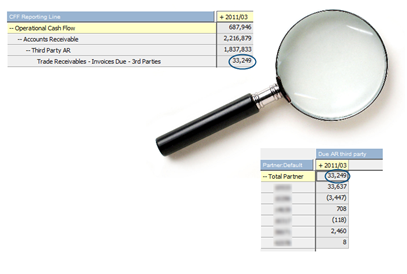

Using payment behavior instead of DSO/DPO it becomes possible to forecast outstanding receivables and payables significantly more accurately. There is another major gain: this logic can be traced back, so it’s feasible to drill through from the payments forecast to fall due in a certain period to individual customers, or even invoices.

Drill-through makes it possible to check the forecast at customer or even invoice level

This provides an unprecedented operational tool for the AR department, extending the Cash Flow Forecasting system’s reporting use into active monitoring, AR management and collection.
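The logic can be sketched in a few lines of Python, assuming an average days-late figure per customer is already available. Everything here (invoice numbers, customers, the `forecast_receipts` helper and the monthly buckets) is invented for illustration; the real model lives in TM1 cubes and rules.

```python
from collections import defaultdict
from datetime import date, timedelta

# Assumed input: average days late per customer (negative = pays early).
days_late = {"ACME": 8, "Globex": -2}

# Hypothetical open receivables: (invoice id, customer, due date, amount).
open_invoices = [
    ("INV-1", "ACME", date(2011, 3, 28), 5000.0),
    ("INV-2", "Globex", date(2011, 4, 1), 2000.0),
    ("INV-3", "ACME", date(2011, 4, 15), 1500.0),
]


def forecast_receipts(invoices, behaviour):
    """Bucket open invoices by the month in which payment is expected.

    The invoice lines are kept in each bucket so every forecast figure
    can be drilled through to the customers and invoices behind it.
    """
    buckets = defaultdict(list)
    for invoice_id, customer, due, amount in invoices:
        expected = due + timedelta(days=behaviour.get(customer, 0))
        buckets[(expected.year, expected.month)].append(
            (invoice_id, customer, expected, amount)
        )
    return dict(buckets)


forecast = forecast_receipts(open_invoices, days_late)
totals = {period: sum(line[3] for line in lines) for period, lines in forecast.items()}
print(totals)                                     # forecast per month
print([line[0] for line in forecast[(2011, 4)]])  # drill-through for April
```

Note how INV-1 is due in March but lands in April, and INV-2 is due in April but lands in March: a forecast based purely on due dates would put both in the wrong month.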

The Payables side can be readily influenced by the organization, hence it makes sense to be able to simulate how much headroom finance can gain this way.

Putting it all together: the complete actuals side is recorded in the books of the organization and can thus be loaded into the Cash Flow system once proper mapping to report lines has been done (e.g. accounts to cost lines, taking special ‘suppliers’ like the government for taxes into consideration). AR and AP forecasting for all outstanding items is covered in the Payment behavior paragraphs. Another component of the Cash Flow Forecast that is rather straightforward to automate is open orders from the operational systems, where the same payment-behavior-based logic can be applied.

This already gives a fairly accurate picture of the expected cash flows for the short and midterm outlook. Needless to say it does not make sense to automate everything from a cost / benefit point of view. Some inputs – however significant their impact is – are easier managed in standardized spreadsheets and then uploaded into the forecasting system.

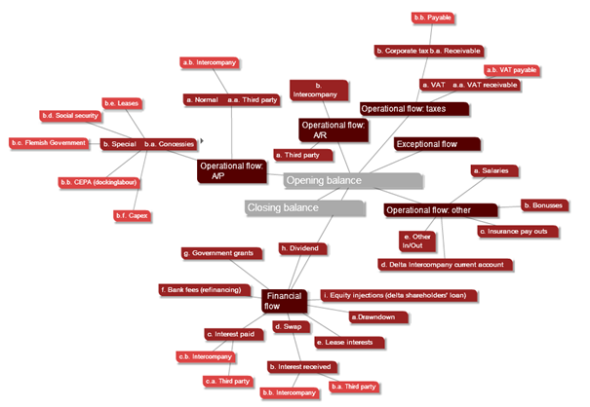

The following diagram provides an overview of the included Cash Flow Forecast components:

Currency handling certainly has to be thought of providing an additional dimension of analysis and simulation capability while providing the final Cash Flow Forecast in the chosen (reporting) currency.

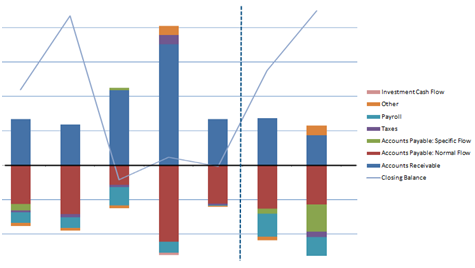

Cash Flow Forecast as stacked chart marking actuals->forecast cutover

Having the cash flow calculated by TM1 on a detailed, daily level makes reporting by week or by month simple and straightforward, putting an end to maintaining the Cash Flow Forecast in multiple report structures.
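In TM1 this is simply a matter of consolidations in the time dimension. Outside TM1 the principle can be sketched as follows; the daily figures and the `rollup` helper are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical net cash flow per day.
daily_cash_flow = {
    date(2011, 3, 30): 2000.0,
    date(2011, 3, 31): -500.0,
    date(2011, 4, 1): 1200.0,
    date(2011, 4, 5): 6500.0,
}


def rollup(daily, key):
    """Sum daily amounts into whatever period the key function returns."""
    totals = defaultdict(float)
    for day, amount in daily.items():
        totals[key(day)] += amount
    return dict(totals)


# One daily data set, two report structures.
by_month = rollup(daily_cash_flow, lambda d: (d.year, d.month))
by_week = rollup(daily_cash_flow, lambda d: d.isocalendar()[:2])  # (ISO year, ISO week)

print(by_month)
print(by_week)
```

Because both views are derived from the same daily data they always reconcile, which is exactly what is hard to guarantee with separately maintained weekly and monthly spreadsheets.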

Using a sophisticated business intelligence tool can provide additional benefits: manual input values can be statistically forecasted based on moving-average algorithms, or calculated as a simple proportional change using data spreading functionality.

The latter functionality could become quite handy if there are no budget figures, or no recent ones, available for forecasting; projecting sales, for one, is likely to be a good candidate for such an approach.
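For readers unfamiliar with the two techniques, here is a minimal sketch of each. This is not how TM1 implements them; the function names and sample figures are assumptions made purely for illustration.

```python
def moving_average_forecast(history, window, periods):
    """Extend a series by repeatedly averaging its last `window` values."""
    if window < 1 or window > len(history):
        raise ValueError("window must be between 1 and len(history)")
    series = list(history)
    for _ in range(periods):
        series.append(sum(series[-window:]) / window)
    return series[len(history):]


def spread_proportionally(total, reference):
    """Distribute a new total across periods in proportion to reference values."""
    base = sum(reference)
    if base == 0:
        raise ValueError("reference values must not sum to zero")
    return [total * value / base for value in reference]


# Hypothetical monthly sales history, forecast two further months.
print(moving_average_forecast([100.0, 110.0, 120.0], window=3, periods=2))

# Hypothetical: spread a target of 1200 using last year's monthly pattern.
print(spread_proportionally(1200.0, [1.0, 2.0, 3.0]))
```

The moving average smooths out noise in the recent history, while proportional spreading keeps the existing seasonal shape and only rescales it to a new total.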

Project and implementation overview

No article would be complete without reviewing the internal and external resources and time required to deliver such a Cash Flow Forecast.

The typical timeline for such projects using in-memory technology (like IBM Cognos TM1) lies between 1 and 3 months. By the end of the first month a working proof of concept is expected to be ready. The technical platform used was Cognos Express, providing additional reporting capabilities on top of TM1.

High level dataflow

Involvement of finance has a key impact on the bottom line; in fact it is a necessity, as the cash flow forecast is not only finance’s responsibility but many of its building blocks are also managed in-house. Best practice is for finance not only to own the data and reports but also to have reasonable control of and skills in the tools, so that it can meet the organization’s continuously changing requirements in a timely and efficient manner.